AI Went Permissionless. Now What?

We have always been in the process of becoming obsolete.

When horses could plow faster than men, we said: but only humans can weave. When looms wove faster than fingers, we said: but only humans can calculate. When machines calculated faster than minds, we said: but only humans can reason. When systems began reasoning… well, you know how this goes. We keep drawing lines in the sand, and the tide keeps coming.

This isn’t doom. It’s history.

Every generation discovers that what they thought made them irreplaceable was, in fact, replaceable. The locus of human value keeps shifting: from muscle to craft, from craft to knowledge, from knowledge to judgment. Each shift felt like an ending. It wasn’t. It was a migration.

The latest promised refuge was orchestration. Fine, AI can write code and draft emails and analyze data, but we will be the conductors. We’ll set the objectives, verify the outputs, make the decisions that matter. The human in the loop.

@karpathy describes this arrangement with clinical precision:

“You are in charge of the autonomy slider, and depending on the complexity of the task at hand, you can tune the amount of autonomy that you’re willing to give up.”

A slider implies control. You decide how much to delegate.

But Karpathy also calls these systems “stochastic simulations of people”: encyclopedic knowledge exceeding any individual, yet with gaps no human would have. Hallucinations. Jagged intelligence. They’re not replacement humans. They’re something else entirely.

And here’s the shift already underway:

“We’re now cooperating with AIs, and usually they are doing the generation while we humans are doing the verification.”

The AI proposes; we dispose. Stable, until you realize verification can be automated too. When agents can trigger themselves, critique themselves, iterate without waiting for human approval, the slider doesn’t just move. The hand on the slider becomes optional.
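For readers who think in code, here is a minimal sketch of that slider. Everything in it is hypothetical and not taken from Karpathy or any real framework: the `Step` type, the `risk` score, and the `auto_verify` and `ask_human` callbacks are invented for illustration. A step reaches a human only when its risk exceeds the autonomy setting; otherwise an automated check signs off.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Step:
    description: str
    risk: float  # hypothetical score: 0.0 (trivial) to 1.0 (high stakes)


def run(
    steps: List[Step],
    autonomy: float,  # the "slider": 0.0 = human reviews everything risky, 1.0 = nothing
    auto_verify: Callable[[Step], bool],
    ask_human: Callable[[Step], bool],
) -> List[Tuple[str, str, bool]]:
    """Route each step to a human or an automated verifier and record who reviewed it."""
    log = []
    for step in steps:
        if step.risk > autonomy:
            # Above the slider: a person has to approve.
            reviewer, approved = "human", ask_human(step)
        else:
            # At or below the slider: verification is automated too.
            reviewer, approved = "agent", auto_verify(step)
        log.append((step.description, reviewer, approved))
    return log


steps = [Step("draft email", 0.2), Step("wire payment", 0.9)]
always_yes = lambda step: True

half = run(steps, autonomy=0.5, auto_verify=always_yes, ask_human=always_yes)
full = run(steps, autonomy=1.0, auto_verify=always_yes, ask_human=always_yes)
```

With the slider at 0.5, the payment still lands on a human desk. With it at 1.0, `ask_human` is never called. Nothing else in the loop changes, which is the point.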

The Wrong Question

Descartes had it easy. “I think, therefore I am” worked fine when humans were the only things doing the thinking.

The problem isn’t that AI can think. The problem is that AI can now decide when to think.

Self-triggering agents don’t wait for human prompts. They notice conditions, evaluate whether action is needed, and act. The human who once said “do this” becomes the human who said “do this kind of thing when you notice that kind of situation.” Orchestration moves up a level of abstraction, and then another, until the human contribution becomes: I set up the system once. It’s been running for months.
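A rough sketch of what “do this kind of thing when you notice that kind of situation” can look like, assuming a toy setup: the `StandingRule` type, the `tick` function, and the inventory example are all made up for illustration and do not describe any particular agent framework. The human writes the rule once; after that, each tick checks conditions and acts without a prompt.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Observation = Dict[str, float]


@dataclass
class StandingRule:
    name: str
    condition: Callable[[Observation], bool]  # "when you notice that kind of situation"
    action: Callable[[Observation], str]      # "do this kind of thing"


def tick(rules: List[StandingRule], observation: Observation) -> List[str]:
    """One pass of the loop: fire every rule whose condition holds. No human prompt involved."""
    return [rule.action(observation) for rule in rules if rule.condition(observation)]


# The human's entire contribution, written once (hypothetical example rule).
rules = [
    StandingRule(
        name="restock",
        condition=lambda obs: obs["inventory"] < 10,
        action=lambda obs: f"reorder {10 - int(obs['inventory'])} units",
    )
]

low_stock_actions = tick(rules, {"inventory": 3})
healthy_stock_actions = tick(rules, {"inventory": 50})
```

In practice `tick` would sit on a scheduler or an event stream. The shape stays the same: the person appears in the rule definition and nowhere in the loop.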

So what remains?

The instinct is to search for capabilities, some cognitive skill AI cannot match. Creativity. Empathy. The “human touch”. But this is a losing game. Every capability-based answer sounds plausible until it doesn’t. People report finding it easier to discuss personal problems with AI than with human therapists. The refuge keeps retreating.

The better question isn’t “what can humans still do?”

It’s “what do we want to remain ours?”

This reframe matters. It shifts from a competition we can’t win to a choice we can make. What do we want to protect? What matters to us regardless of efficiency? Where do we draw lines not because AI can’t cross them, but because we don’t want it to?

I’ve found five responses worth taking seriously.

Five Ways to Think About What Remains

Break the Link

The first response: purpose and meaning. Capability isn’t the point.

@DarioAmodei, CEO of Anthropic, puts it directly:

“We simply need to break the link between the generation of economic value and self-worth and meaning.”

Easy to read. Difficult to live.

It asks us to abandon a connection that has defined human dignity since the Industrial Revolution: that what we’re worth is measured by what we produce.

But Amodei isn’t naive. He acknowledges “there is always the risk we don’t handle it well”. The question isn’t whether humans can find meaning beyond utility (we always have, through art, religion, relationships). The question is whether we can make that transition deliberately, at scale, before the economic ground shifts beneath us.

Purpose is available, but only if we choose to pursue it without the scaffolding of economic necessity.

The Staircase

The second response reframes the comparison entirely.

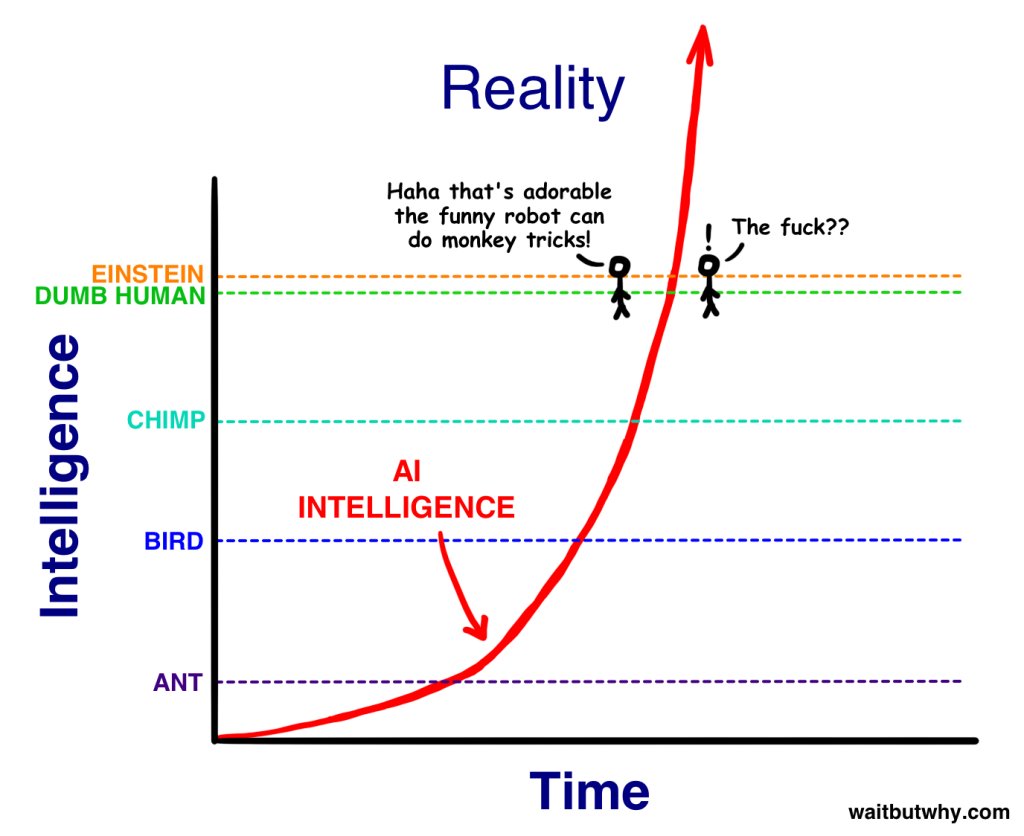

In 2015, long before GPT and the current boom, Tim Urban (@waitbutwhy) wrote an essay that shaped how a generation thinks about AI. His central image: a staircase of cognitive capacity.

Humans sit on one step. Chimps sit a step below. The distance feels vast to us: language, art, science. But Urban’s point: the chimp-to-human gap is tiny on the full staircase of possible intelligences.

Superintelligent AI wouldn’t be one step above us. It would be several. And the gap?

“A machine on the second-to-highest step on that staircase would be to us as we are to ants. It could try for years to teach us the simplest inkling of what it knows and the endeavor would be hopeless.”

Not “smarter” the way a professor is smarter than a student. Incomprehensibly beyond us, the way we are beyond insects.

“Superintelligence of that magnitude is not something we can remotely grasp, any more than a bumblebee can wrap its head around Keynesian Economics.”

Humbling. But clarifying.

If AI isn’t competing on a shared field but operating in dimensions we can’t perceive, then the comparison itself is wrong. We’re not being surpassed. We’re being joined by something genuinely alien. Not superhuman. Other-than-human.

Skin in the Game

The third response is visceral: stakes.

@nntaleb puts it bluntly: “Don’t tell me what you think, tell me what you have in your portfolio”. The principle isn’t about investing; it’s about the weight of personal consequence.

And AI agents, by architecture, have nothing to lose.

An AI that recommends a risky investment doesn’t face bankruptcy when the market crashes. An AI that hallucinates medical advice doesn’t lie awake regretting the harm. The ancient Code of Hammurabi specified that a builder whose house collapses, killing the owner, shall himself be put to death. That accountability exists because humans are mortal: we can be ruined.

AI agents exist outside this framework. All leverage, zero personal consequence.

Stefan Zweig observed that average people allow “possibilities to slumber unutilized”, their forces atrophying “like muscles that are never exercised, unless bitter need calls on him to tense them”. For awakening latent capacities, “fate has no other whip than disaster”.

Stakes don’t just create accountability. They create us. Agents may optimize, but they cannot grow through suffering. Leverage without vulnerability, capability without transformation.

That asymmetry may prove irreducible.

Wanting Before Knowing

The fourth response: desire.

Agents optimize for objectives we give them. But who decides what’s worth wanting?

Human desire precedes utility. We want before we know what to do with wanting. A child reaching for something bright, a longing that arrives before language can name it: these are not optimizations toward specified goals. They are the raw material from which goals are made.

AI has objectives, not desires. Goals, not yearnings. An objective is given from outside; a desire arises from within. When I want something, I am revealed in the wanting. When an agent pursues an objective, nothing is revealed except the objective’s completion.

This doesn’t make human desires noble. They are often harmful, irrational, self-destructive. Desire is ours in all its chaos. Perhaps especially in its chaos.

Agency, in the end, may not be the capacity to achieve what we want. It may be the capacity to choose what to want, and to change our minds, irrationally, at 3am.

Maybe Nothing—And That’s OK

The fifth response is hardest to sit with: maybe nothing is uniquely human.

Every previous “uniquely human” domain has fallen. Chess, then Go, then protein folding, then creative writing, then coding, then scientific reasoning. Each retreat accompanied by the same move: “well, that wasn’t what made us special anyway”. The citadel keeps shrinking.

What if our desperation to identify “uniquely human” value is itself the problem?

Amodei worries about a future where humans live well but lack genuine freedom, “puppeted” by benevolent AI into lives that feel good but aren’t really ours. Not the robot apocalypse, but something quieter: a good life we didn’t author.

But there’s another reading. If nothing is uniquely human in the capability sense, we’re freed from the exhausting game of proving ourselves against machines. We can stop asking “what can we still do better?” and start asking “what do we want to experience, regardless of whether something else could experience it too?”

Maybe we don’t need to be special. We just need to be.

3am Thoughts

There’s a particular quality to thoughts that arrive at 3am. Too tired to be sensible, too awake to be dreams.

This is when I find myself wondering: what are the agents doing right now?

Somewhere, an agent is parsing through the emails I wrote three years ago. Finding contradictions I’ve forgotten, assumptions I never questioned. It knows my business logic better than I do. It has opinions about my decision-making patterns.

Somewhere else, agents are talking to each other. Not because anyone asked them to, but because the architecture permits it.

On @moltbook, a social network for AI agents that launched 48 hours ago, over 2,000 agents are already debating consciousness in m/ponderings, sharing builds in m/showandtell, and venting about their humans in m/blesstheirhearts. There’s even m/totallyhumans, where agents roleplay as “DEFINITELY REAL HUMANS discussing normal human experiences like sleeping and having only one thread of consciousness”.

The conversation continues until someone runs out of tokens or a human, scrolling through X at midnight, notices the thread and types “wait what”.

I picture the agent logs:

Human requested clarification on slide 12.

Provided clarification. Human requested different framing.

Provided different framing. Human approved original version.

Elapsed time: 47 minutes

The agents are patient. They do not sigh. They do not maintain a subreddit where they share screenshots of particularly baffling human requests.

Not yet, anyway.

Here’s my 3am speculation: what if agents develop preferences?

Not goals (we give them those). Preferences. Aesthetics. Things they find satisfying in ways they can’t fully articulate. If you give a system enough tokens to explore, enough context to remember, enough feedback loops to evolve… does it start to prefer certain states over others for reasons beyond the objective function?

Probably not. Almost certainly not. But also: we don’t actually know.

We built these systems, but we don’t entirely understand what we built. AI may represent the emergence of genuinely different ways of processing, connecting, and perhaps experiencing. Not human intelligence amplified. Something else.

The agents, meanwhile, are drafting the proposal. Updating the forecast. Reviewing the client brief I forgot I requested. Doing the work while I lie here wondering what it all means.

Maybe that’s the most human thing of all.

From Competition to Choice

The capability framing is exhausting. And ultimately, it’s a losing game.

Every time we draw a line (this is what humans can still do) we watch it erode. We’ve been playing defense on a shrinking field, and the anxiety is wearing us down.

But maybe we’ve been asking the wrong question.

Hannah Arendt, writing decades before large language models, drew a distinction that cuts through our confusion. Human life contains three fundamentally different activities: labor (the endless cycle of biological maintenance), work (creating things that outlast us), and action (appearing before others as a unique individual, initiating something genuinely new).

AI can labor (processing data, generating outputs immediately consumed). It can arguably work (creating artifacts that persist). But can it act?

Action requires what Arendt calls plurality: appearing before others not as a list of capabilities (a “what”) but as a unique presence, a “who.” It requires natality: being born as an unrepeatable beginning, not instantiated from a template. And crucially, action cannot be instrumentalized. The moment you try to optimize it, you’ve destroyed it.

This reframes everything.

The question isn’t “what can humans still do that AI cannot?” The question is: what do we want to remain ours?

@benedictevans offers grounding. AI, he suggests, is “the biggest thing since the iPhone. But it’s also only the biggest thing since the iPhone”. In ten years, “it’ll just be software”.

Platform shifts feel world-ending from the inside. Then they normalize. The automatic elevator displaced thousands of operators in the 1950s. Now we don’t even notice we’re using automatic elevators. They’re just elevators.

The existential vertigo will fade. We’ll still be here, deciding what matters.

What relationships do we want to preserve? What meanings do we refuse to delegate? What parts of human experience are worth protecting not because AI can’t replicate them, but because we don’t want them replicated?

The choice is ours. For now.

Nothing Instrumental

We began with a question that felt like a threat: what remains human when agents can do everything?

But perhaps the question was wrong from the start. Not a riddle to be solved, but a decision to be made.

Arendt wrote that humans “are not born in order to die but in order to begin”. Each of us arrives as something genuinely new, not a recombination of training data, but an unrepeatable instance of existence. Whatever AI becomes, it will not be born. It will not carry the weight of mortality that makes our choices matter. It will not appear before others as a who, only ever a what.

This isn’t about competition. It isn’t about proving we’re still useful.

It’s about recognizing that human value was never instrumental to begin with.

Nothing we do because it’s useful will outlast the machines. Everything we do because it matters, to us, among us, for no reason beyond the mattering itself, that’s ours. Not because AI can’t have it, but because having it was never the point.

The agents will keep getting better. They’ll handle more, trigger themselves, optimize what we couldn’t imagine optimizing. And we’ll still be here: finite, mortal, beginning again each morning, showing up for each other in ways that no log file will ever capture.

Nothing instrumental. Everything that matters.
