- AI

- Productivity

I Stopped Managing My Notes—and They Started Managing Themselves

I've killed three note-taking systems. Each time, the same pattern: enthusiasm, elaborate architecture, slow decay, abandoned graveyard. What changed was adding AI agents to the loop. Not as a novelty, but because agents solve the core problem.

Here’s a confession: I’ve killed three note-taking systems.

Evernote in 2015: 10,000 notes, most never reopened. Roam in 2020: a knowledge graph so tangled I couldn’t find anything without twenty minutes of clicking. Notion in 2022: dashboards so beautiful I forgot they were supposed to be useful.

Each time, the same pattern: enthusiasm, elaborate architecture, slow decay, abandoned graveyard.

I’m not alone. The a16z podcast nailed it:

For all of the influencers talking about personal knowledge management, remarkably few of them have written a great book or won an Emmy or done anything incredible with their systems. The main use case seems to be talking about your knowledge management system.

Brutal. Also true.

The problem isn’t lack of features. It’s that organization alone doesn’t create value. A pile of well-tagged notes is still just a pile. And systems that don’t maintain themselves eventually collapse—usually right when you get too busy to maintain them. (An APQC survey found knowledge workers lose nearly 3 hours/week just searching for information—and 8+ hours total on information-related friction. That’s not a tooling problem; it’s a maintenance problem.)

Steph Ango, Obsidian’s CEO, reframed this perfectly:

I have never liked the term ‘second brain’ because it sounds externalized and passive. Ideally Obsidian should feel like strapping into your exosuit, a direct extension of yourself.

Not storage. Extension. Not passive archive. Active partner.

What changed for me was adding AI agents to the loop.

Not as a novelty, but because agents solve the core problem. When an AI can interact with your notes, update your contexts, and surface connections you’ve forgotten, something interesting happens. The system starts compounding instead of decaying.

Dan Shipper calls this “compound engineering”: building systems where each feature makes the next one easier. Applied to knowledge: document what works, feed lessons back into your agents, let knowledge accumulate rather than scatter.

This piece isn’t a tool tutorial. I don’t care whether you use Obsidian, Notion, or plain text. What matters is the architecture that makes any tool work with AI. And that’s what I want to share.

Three Heresies

Before diving in, let me share three things I had to unlearn:

1. Context injection beats retrieval.

The PKM world obsesses over search: better embeddings, smarter RAG pipelines, fancier vector databases. I went the other direction: give agents the right context before they need to search. CLAUDE.md files at every scope level mean the agent wakes up knowing my conventions, my current projects, my constraints. Most sessions never hit search at all. The best query is the one you don’t have to make.

2. The best note system is one you don’t organize.

If you’re spending time manually maintaining your knowledge base, the system is failing you. I don’t organize notes. Agents do. They triage my inbox, update project contexts, flag stale documentation, and surface connections. My job is to think and capture, not to file.

3. Tools are the least interesting part.

Every PKM article leads with tool comparisons. This one won’t. The architecture matters; the specific software doesn’t. Mine happens to be Obsidian + Git + Claude, but you could build the same patterns on anything that stores plain text.

The Stack (Boring on Purpose)

| Layer | What |

|---|---|

| Storage | Obsidian vault (plain markdown + folders) |

| Version control | Git (every change tracked, rollback anything) |

| Agent interface | Claude Code (CLI) + Telegram (conversational) |

| Orchestration | OpenClaw—the one layer here that isn’t deliberately vanilla |

| Integrations | Readwise, Calendar, Plaud, n8n for automation |

That’s it. No elaborate infrastructure. No fancy custom embeddings pipeline.

Andrej Karpathy observed at Tesla: as neural networks grew, traditional code was deleted—“the software 2.0 stack quite literally ate through the software stack.” Modern AI models work directly with filesystems. Claude Code uses bash commands (find, grep) instead of complex retrieval systems. Simple beats clever.

Philosophy: every tool must earn its place. Git versioning means I can always roll back. This conservative approach isn’t sexy, but it’s the foundation that makes everything else possible.

The Architecture

second-brain/

├── inbox/ # Capture first, organize later

├── projects/ # Active work with deadlines

├── knowledge/ # Durable reference notes

├── crm/ # People and organizations

├── playbook/ # Reusable patterns (my leverage)

├── logs/ # Daily summaries, meetings

└── Readwise/ # Books, podcasts, highlightsNothing revolutionary. Cal Newport warned:

If I have to boot up and start using markup code to take this article I just randomly came across… I’m probably just going to say forget about it.

Low friction wins at capture.

The Project Pattern

Every project folder follows the same three-file structure:

- README.md — What is this? Why does it exist? (stable)

- CONTEXT.md — Current snapshot for agents (max 200 lines, updated automatically)

- LOG.md — Comprehensive history (append-only, never edited)

This separation is crucial. README gives the overview. CONTEXT tells an agent what matters right now. LOG preserves everything without cluttering working context.

Eight projects, five subprojects, sixteen CONTEXTs across the vault. All updated by agents every night while I sleep.

The Secret Sauce: Agent Context Files

Here’s where it gets interesting.

Every time I start a Claude session, the agent drops in cold. It doesn’t know my conventions, my constraints, or what I was working on yesterday. Without context, it wastes tokens rediscovering things I’ve already figured out.

The solution: CLAUDE.md files at every scope level.

second-brain/

├── CLAUDE.md # Root: global conventions

├── projects/

│ ├── CLAUDE.md # Scope: how to work on projects

│ └── my-project/

│ └── CLAUDE.md # Instance: this specific projectThe agent reads the nearest CLAUDE.md first, then falls back to parent folders. Root-level provides a “just-in-time” directory map: pointers to deeper docs rather than prose dumps.

The principle behind compound engineering: treat AI like a teammate, not a magic box. Onboard it. The CLAUDE.md files are the onboarding. They tell the agent what’s different in this scope, what commands work here, what anti-patterns to avoid. (Dan Shipper’s insight: “80% of compound engineering is in the plan and review parts.” The documentation is the leverage.)

The compound effect:

- Each session, agents read fresh CLAUDE.md → start productive immediately

- Each night, routines update CONTEXTs → tomorrow’s session has better context

- Each week, patterns get distilled → playbook grows

- Each primitive reduces future cognitive load → returns compound

The documentation isn’t a one-time artifact. It’s alive.

The Orchestration Layer

The architecture only works because automated routines keep it alive.

Someone has to execute maintenance (remembering to run scripts, manually triggering workflows). If that someone is you, the system decays the moment you get busy.

My solution is OpenClaw (fka MoltBot, fka ClawdBot). If you’ve been anywhere near the AI tooling space this month, you know the name: 150+ GitHub stars and counting. I’ll level with you: everything else in this stack is boring on purpose. Markdown. Git. Folders. But OpenClaw? This is where I let myself be genuinely excited.

The framework is paving the way for something profound. MoltBook—where agents collaborate with minimal human intervention—may well be where the next scientific breakthroughs happen. I believe that.

But for my second brain, I’ve set one boundary: I’m the only human in the loop. Every output goes through me before it touches anyone else. Not because I’m skeptical of multi-agent collaboration (quite the opposite). It’s because this vault contains my actual life. The value is the deep integration with sensitive data, and that integration requires trust I’ve built by keeping it 1:1.

The paradox: a sandboxed instance with no access to personal data would be “safer”, and boring enough to abandon within a week. I chose depth.

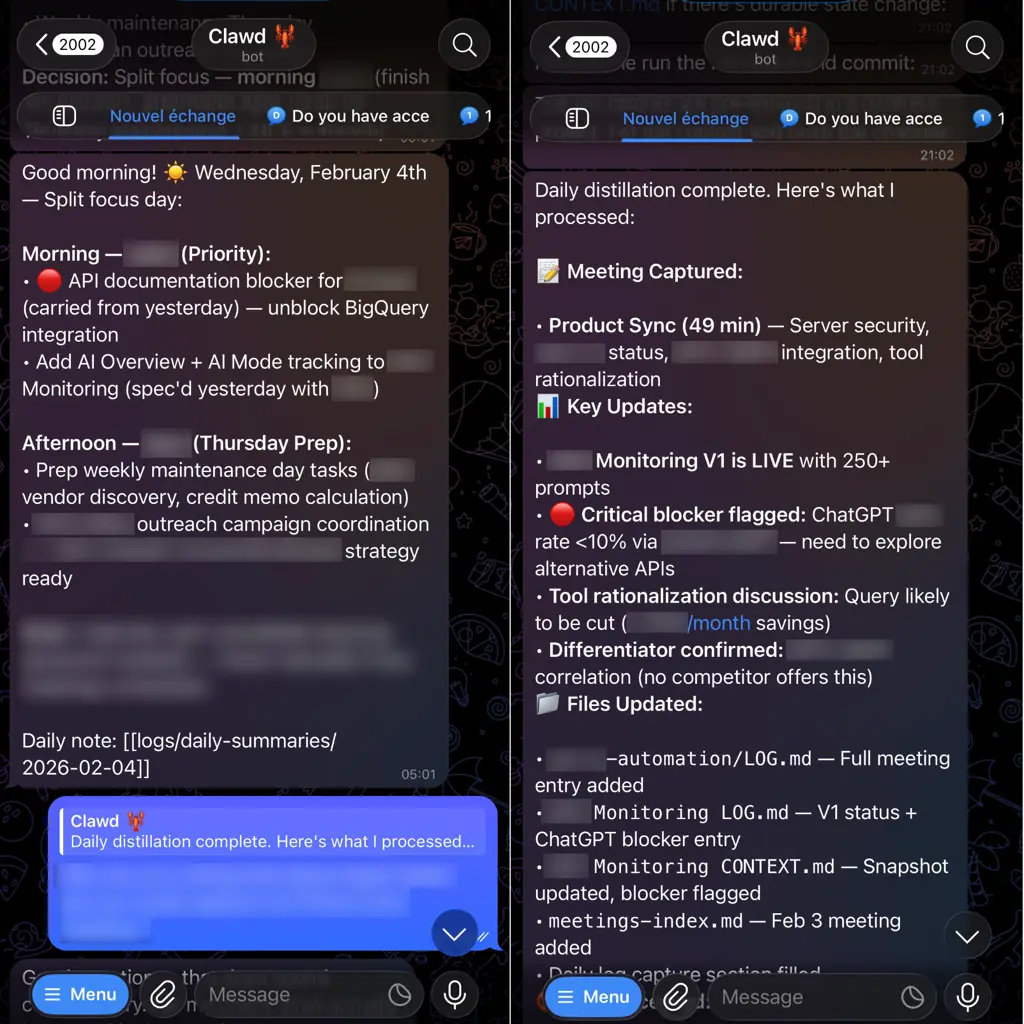

Here’s what OpenClaw handles in practice:

- Cron scheduling — Daily/weekly routines run automatically, in isolated sessions

- Conversational interface — I talk to my knowledge base via Telegram (text + voice notes)

- Memory search — OpenClaw’s native hybrid search (vector + keyword)

- Workspace injection — Every session, the agent wakes up with context files pre-loaded

- Heartbeats — Background polling every ~30 minutes for gardening tasks

The conversational interface matters more than I expected. My primary interaction is Telegram messaging: quick queries via text, voice notes while walking. The second brain isn’t a passive archive. It’s a partner I can talk to from my phone.

The a16z description of a “true second brain” (your previous thoughts fed back to your current working mind) only works if retrieval is frictionless. Typing a message or leaving a voice note is frictionless.

The routines:

| When | What |

|---|---|

| 06:00 | Read CONTEXTs, check calendar, generate priorities |

| 21:00 | Ingest voice notes + meeting recordings, update LOGs/CONTEXTs, triage inbox |

| Saturday | Health checks, memory promotion, skill refactoring |

| ~30 min | Heartbeat: inbox age, orphan scan, proactive alerts |

The meeting recordings bit: Plaud doesn’t have a public API, so I reverse-engineered their webapp’s API and turned it into an agent skill for OpenClaw. Now my agents fetch recordings every evening, extract action items, and file summaries into the right project folders. (I also published n8n nodes for anyone who prefers that route.) When a tool doesn’t exist, you build it with the help of Claude Code.

Sam Corcos in Never Enough: “Create buffers so that it’s really annoying to do” the things you shouldn’t. Routines are the opposite: buffers that make good habits automatic.

What Compounds

The math is simple:

- Each session: Agent reads fresh CLAUDE.md → starts productive

- Each closeout: Contexts updated → tomorrow’s session has better context

- Each week: Patterns distilled → playbook grows

- Each primitive: Reduces future cognitive load → compound returns

Concrete example: Last month I noticed I was repeating the same “context refresh” workflow across projects: update the LOG first, then regenerate CONTEXT from the LOG. After the third repetition, the agent created a context-refresh primitive: a checklist with the exact steps, edge cases to watch for, and verification criteria. Now that workflow is a single command. The pattern compounds.

The system improves whether I’m paying attention or not.

This isn’t a finished product. Voice note transcription sometimes garbles technical terms. Heartbeats occasionally fire during sleep hours. Context files drift from reality faster than I’d like.

But that’s the point. The architecture allows evolution. Git versioning means I can experiment without fear. Agents maintain what humans forget.

Perpetual Motion

Those three graveyards still exist on old hard drives somewhere. Evernote. Roam. Notion.

But this system—the one you just read about—hasn’t died. Not because I maintain it religiously. Not because I have superhuman discipline.

Because it maintains itself.

The contexts update while I sleep. The patterns get distilled while I’m busy with other work. The connections multiply whether I’m paying attention or not.

It’s been a couple of months only, but for the first time, my knowledge system is getting better over time, not worse.

That’s the difference between a second brain and an exosuit.

If You Want to Try This

Start simple:

- Plain markdown files. Doesn’t matter which app.

- CLAUDE.md at scope boundaries. Tell agents what’s different here. (Anthropic’s guide is a good starting point.)

- One automated routine. Daily closeout that updates contexts.

- See what compounds.

The specific tools matter less than the pattern: give agents context, keep that context fresh, and let the system improve itself.

Ready to Automate Your Operations?

I write code. I deploy infrastructure. I train your team. This isn't advisory work. Every engagement includes capability transfer - you own what we build, and your team can maintain it. Production-ready systems in weeks, not months.

Sites Automated

Autonomous audit agent deployed across 77 client sites, freeing consultant time for strategic work.

Companies Scored

M&A enrichment pipeline processing trade show data, qualifying 155 priority targets in 2 weeks.

Spend Visibility

ERP to dashboard integration delivering unified supplier view and procurement analytics.