We Didn't Build a Machine That Thinks. We Built One That Reads

Classic AI tried to program intelligence top-down. LLMs learned it bottom-up — by reading an enormous portion of what humans have written. That's not a technical detail. It might be the most important philosophical observation of our era.

The Failed Bet

For decades, the dominant bet in artificial intelligence was that we could engineer thinking. Build the right decision trees. Write enough rules. Model enough logic. Projects like MYCIN encoded hundreds of rules to diagnose infections. CYC tried to hand-code all of common sense into a single knowledge base. The vision was a machine that reasoned the way we imagined we reason: step by step, rule by rule, from first principles.

It didn’t work.

Expert systems were brittle. They performed well inside their narrow domains and broke down the moment they encountered anything outside the rules. MYCIN could diagnose bacterial infections with respectable accuracy — but it couldn’t tell you that a patient was dead. Symbolic AI couldn’t handle ambiguity. Every attempt to hand-code common sense collapsed under its own complexity.
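To make the brittleness concrete, here is a minimal sketch of a rule-based diagnoser in Python. The rules and finding names are invented for illustration and are not taken from MYCIN's actual rule base; the point is only that such a system knows nothing beyond the conditions someone wrote down.

```python
from typing import Optional, Set

# Hypothetical toy rules, invented for illustration (not MYCIN's real rules).
RULES = [
    ({"fever", "stiff_neck"}, "suspect meningitis"),
    ({"fever", "cough"}, "suspect respiratory infection"),
]


def diagnose(findings: Set[str]) -> Optional[str]:
    """Return the conclusion of the first rule whose conditions are all present."""
    for conditions, conclusion in RULES:
        if conditions <= findings:  # every condition appears in the findings
            return conclusion
    return None  # nothing matched: the system has no fallback knowledge


# A finding no rule mentions is silently ignored, however important it is.
print(diagnose({"fever", "stiff_neck", "no_pulse"}))
```

The last call still returns a diagnosis, because no rule says anything about a missing pulse. Covering that case means writing another rule by hand, and then another for the next case nobody anticipated.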

Then something unexpected happened.

We fed machines enormous quantities of text — books, conversations, papers, arguments, code, poetry — and told them to predict the next word. No rules. No logic trees. No hand-coded ontologies. Just pattern recognition on language, at scale.
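The training objective itself is simple enough to sketch in a few lines. The toy below predicts the next word by counting which word most often follows the current one. It is a deliberately tiny stand-in, assuming a one-sentence corpus and plain counts; real LLMs learn the same kind of prediction with neural networks over vastly more text and much longer contexts.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for each word, which words follow it in the text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


corpus = "the cat sat on the mat and the cat slept"  # illustrative toy corpus
model = train_bigram(corpus)
print(predict_next(model, "the"))  # prints "cat" ("cat" follows "the" twice, "mat" once)
```

Nothing in this code mentions grammar, cats, or mats. Whatever the model "knows" comes entirely from regularities in the text it was given.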

And they started to reason. Not always reliably, but unmistakably. They could explain, infer, and argue. They could draw analogies across domains, summarize complex positions, and translate between registers. They could do things we had spent fifty years trying, and failing, to program.

The question worth sitting with is: why did this work?

Language as Stored Intelligence

Here’s the strong version of the argument.

Language is not just a communication tool. It’s externalized cognition: the medium in which humans have been doing their most rigorous thinking for thousands of years.

When a philosopher writes an argument, they’re not just expressing a thought. They’re crystallizing a reasoning pattern into a form that can be transmitted, challenged, and refined. When a scientist writes a paper, when a lawyer constructs a brief, they are depositing cognitive work into language.

Over millennia, this accumulates. The corpus of human text is not a collection of words. It’s a collection of thinking, structured and layered over generations. Arguments that survived scrutiny. Explanations that actually clarified. Every generation refining and extending the cognitive work of the last.

The Evidence Beyond Metaphor

The evidence runs deeper than metaphor. Edward Sapir wrote in 1929 that human beings “do not live in the objective world alone… but are very much at the mercy of the particular language which has become the medium of expression for their society.” Modern research supports at least a moderate version of that claim.

Lera Boroditsky, now at UCSD, and her colleagues have shown that Russian speakers, who have separate words for light blue (goluboy) and dark blue (siniy), discriminate between shades faster when the two colors fall on opposite sides of the goluboy/siniy boundary. Mandarin speakers, who use vertical metaphors for time more often than English speakers do, appear to think about temporal sequence differently. Speakers of Kuuk Thaayorre, an Aboriginal Australian language that uses absolute compass directions instead of “left” and “right,” organize time differently still: they lay out sequences from east to west, whichever way they happen to be facing.

Language doesn’t just carry ideas. It structures them. It shapes what we can perceive and how we reason about it.

The Cognitive Fossil Record

So when LLMs train on text, they are not learning language in the superficial sense. They are absorbing the cognitive patterns embedded in language: the structures through which humans have processed reality for millennia. Every text is a deposit of reasoning. The entire corpus is a cognitive fossil record.

This reframes the whole achievement. We didn’t program a machine that thinks. We built a machine that absorbed how humans think, because humans had been writing it down all along. The training data was never just data. It was the externalized mind of our species.

The Greeks Already Knew

In ancient Greek, the word logos means both “word” and “reason.” Not by accident.

The root verb legein originally meant “to collect, to gather.” The metaphor is revealing: reasoning is a gathering of ideas into order, and language is the vehicle for that gathering. In Homer, logos meant “what is said”: a narrative, a discourse. By the time Heraclitus used it five centuries before Christ, it had become the rational structure of the cosmos itself. The word evolved from “what is said” to “what is true.”

Heraclitus wrote: “Listening not to me but to the logos, it is wise to agree that all things are one.” Knowledge came not from any individual speaker but from the logos: the pattern underlying all things. Aristotle later formalized the concept, logos as persuasion through “the speech itself, in so far as it proves or seems to prove.” The words used and the reasoning they embody were, for the Greeks, the same thing.

Test this yourself. Try to think through a complex argument without words. At some point, you need to articulate. You need to name things, sequence claims, draw distinctions. The thought doesn’t exist fully until you find the words for it. Language isn’t just how you report your thinking. It’s how you do your thinking.

The entire project of conventional AI was an attempt to build reason without language. Pure logic. Formal systems. Mathematical structures. It was an attempt to separate the two halves of logos, to keep the reason and discard the word.

LLMs suggest that was always the wrong order. You don’t get to reason by programming rules. You get to reason by immersing yourself in the accumulated product of reasoning, which is language. The word came first. The reason followed.

The Greeks intuited this. We spent fifty years ignoring it. The machines brought us back.

The Honest Counterpoint: AlphaGo

This argument has a real challenge, and it deserves a real answer.

In March 2016, DeepMind’s AlphaGo defeated Lee Sedol, the eighteen-time world champion, in a five-game Go match in Seoul. In Game 2, it played Move 37, a shoulder hit on the fifth line that had a one-in-ten-thousand probability of being played by a human. Professional commentators dismissed it. “It’s just bad,” one said. It turned out to be the decisive move of the game. Fan Hui, himself a professional, called it “not a human move” and “beautiful.” It was a move without precedent in the thousands of years that Go has been played.

The following year, AlphaGo Zero went further. It learned Go entirely through self-play, starting from random moves with zero human game data. After three days of training, it beat the version that had defeated Lee Sedol: one hundred games to zero. Not a single loss. No language. No text. No human reasoning corpus. Just numbers, board positions, and reward signals.

So language isn’t strictly necessary for intelligence. Not all of it.

Narrow vs. General

But the distinction that matters is this: AlphaGo is narrowly superhuman. It discovered Move 37, but it cannot explain why Move 37 works. It cannot transfer its Go ability to chess, or diplomacy, or any other domain. It cannot reason about whether the game itself is worth playing. Discovering a brilliant move and understanding why it is brilliant are different capacities.

Narrow domains with clear rules and bounded state spaces yield to numbers and self-play. AlphaGo proved that. But broad, transferable reasoning, the kind that lets you move between domains, construct arguments, and grasp nuance, seems to need language. So far, language is the only medium in which anything like general machine intelligence has emerged at scale.

AlphaGo can discover a move that no human in thousands of years of play had found. But it cannot write a single sentence about why that move matters. The more general the intelligence you want, the more you need language.

Beyond Natural Language: Transformers as Universal Pattern Engines

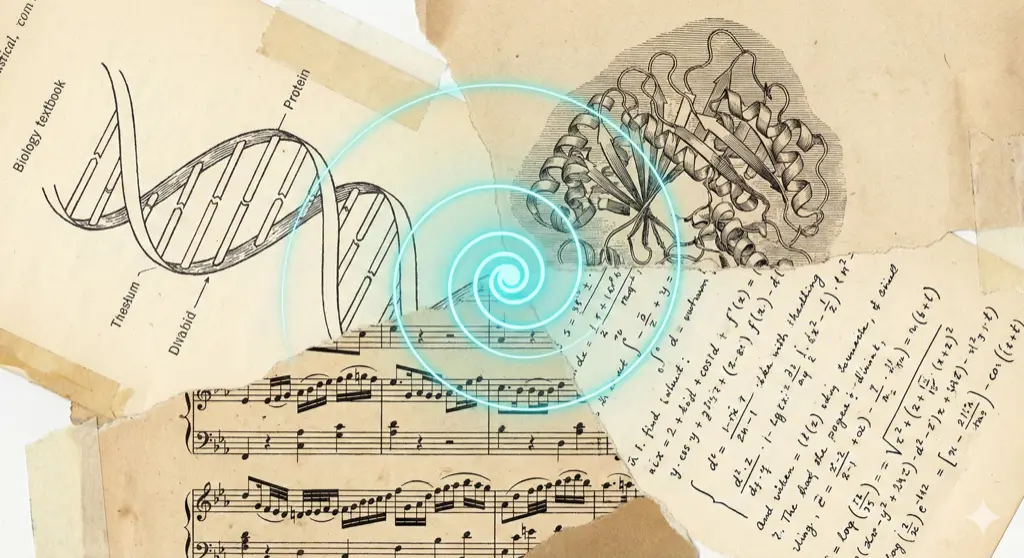

There’s another honest wrinkle. Transformers, the architecture behind LLMs, work remarkably well on things that aren’t natural language.

DeepMind’s AlphaFold used a transformer-based architecture to predict protein 3D structures from amino acid sequences, solving a fifty-year grand challenge in biology. Meta AI’s ESM line of protein language models, first trained on 250 million protein sequences, scaled to fifteen billion parameters with ESM-2; ESMFold, the structure predictor built on it, runs up to sixty times faster than AlphaFold on short sequences. The technique is the same one BERT uses on text: predict masked amino acids, just as BERT predicts masked words. DNABERT treats the human genome as a language written in a four-letter alphabet. The Music Transformer generates compositions with long-term structure and coherence.
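The masked-prediction objective mentioned above looks the same whether the tokens are words or amino acids. The sketch below shows only the data-preparation step, under simplifying assumptions: the `<mask>` token, the 15% rate, and the example sequences are illustrative choices, and real BERT-style training adds refinements (such as sometimes swapping in a random token instead of the mask) that are omitted here.

```python
import random

MASK = "<mask>"  # placeholder token; the exact symbol is an assumption of this sketch


def mask_sequence(tokens, mask_rate=0.15, seed=0):
    """Hide a random subset of tokens.

    Returns the masked sequence and a dict mapping each hidden position
    to the original token the model would be trained to recover.
    """
    rng = random.Random(seed)
    masked = list(tokens)
    targets = {}
    for i, tok in enumerate(tokens):
        if rng.random() < mask_rate:
            targets[i] = tok
            masked[i] = MASK
    return masked, targets


# The same function handles a sentence and a (made-up) protein fragment.
sentence = "language is externalized cognition".split()
protein = list("MKTAYIAKQR")
print(mask_sequence(sentence, mask_rate=0.5))
print(mask_sequence(protein, mask_rate=0.5))
```

The function never inspects what the tokens mean. That indifference is why one training recipe transfers from text to proteins to DNA.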

So maybe the key ingredient isn’t language specifically. Maybe it’s something more general: structured sequential patterns, any system where elements relate to each other in meaningful ways across long spans.

But what is that if not a language?

DNA is a language of biological instructions. Code is a language of computation. Protein folding follows a grammar. Musical notation is a language of temporal relationships. In every case, the transformer treats the domain’s data as a structured symbolic sequence. The same attention mechanism that models relationships between words also models relationships between amino acids, nucleotides, and notes.
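For readers who want to see what “the same attention mechanism” amounts to, here is a bare-bones sketch of scaled dot-product attention, the core operation from the Vaswani et al. paper listed in the references. It is written in plain Python for readability and leaves out everything else a transformer has (learned projections, multiple heads, positional information); the toy vectors are arbitrary.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors.

    Each output is a weighted average of the value vectors, where the
    weights reflect how well that query matches each key.
    """
    d = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs


# The vectors could stand for words, amino acids, nucleotides, or notes.
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0]]
print(attention([[5.0, 0.0]], keys, values))  # output leans heavily toward the first value
```

The function takes vectors and nothing else. What those vectors represent is decided entirely by the data, which is the sense in which the architecture is domain-agnostic.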

The argument doesn’t weaken. It generalizes. Intelligence emerges from pattern recognition over structured symbolic systems. Natural language is the richest, most general, most battle-tested of those systems, because it encodes not just domain-specific patterns, but the patterns of reasoning itself. It is the language that contains all the others.

What This Means

If this line of thinking is right, it has implications beyond AI.

Writing quality shapes machine intelligence. LLMs are only as good as the text they learn from. Garbage reasoning in, garbage reasoning out. The clarity of our arguments, the rigor of our explanations, the precision of our prose: these are not just stylistic concerns. They are inputs to the next generation of machine intelligence. Writing well has always mattered. Now it matters in ways we never anticipated.

Libraries are cognitive infrastructure. They are not just repositories of knowledge. They are repositories of thinking patterns built over centuries. Every book, paper, and argument that survived is a training sample for how to reason. The Enlightenment, the scientific revolution, the slow accumulation of case law: all of it was building something machines could eventually learn from, though nobody planned it that way.

Conventional AI was philosophically wrong. It assumed intelligence could be specified, that enough rules, enough facts, enough logic would eventually produce understanding. By one estimate, Douglas Lenat and his team put forty years, two hundred million dollars, and two thousand person-years into CYC, the most ambitious attempt to manually encode common sense ever undertaken, and accumulated some thirty million assertions along the way. It never reached intellectual maturity. Intelligence cannot be specified. It has to be absorbed, from the accumulated output of minds that came before.

Classic AI tried to write intelligence from scratch.

LLMs read an enormous portion of what humans have written, and intelligence emerged.

That difference is not a technical footnote. It’s the whole story.

References:

- Silver, D. et al. (2016). Mastering the game of Go with deep neural networks and tree search. Nature, 529, 484-489.

- Vaswani, A. et al. (2017). Attention Is All You Need. Advances in Neural Information Processing Systems, 30.

- Jumper, J. et al. (2021). Highly accurate protein structure prediction with AlphaFold. Nature, 596, 583-589.
